The rapid development of Artificial Intelligence (AI) has revolutionized many industries, from healthcare to finance and entertainment. As AI systems become more complex, the need for effective AI testing has become critical. AI testing presents unique challenges that are distinct from traditional software testing.

The process of testing AI models is intricate, requiring a deep understanding of both the technology and the methodologies that ensure their proper functioning. This guide will explore the main challenges faced in AI testing, offering solutions and best practices to address them.

The Importance Of AI Testing

It is essential to understand the importance of testing AI systems to ensure their reliability, accuracy, and fairness.

- AI models, such as machine learning algorithms, are increasingly being used to make decisions that can affect people’s lives.

- Whether it’s a recommendation system, a self-driving car, or a medical diagnostic tool, it is crucial that these AI systems perform correctly.

- The challenges in AI testing arise because AI systems are designed to learn from data and adapt to new situations.

This dynamic nature makes it harder to predict how AI models will behave in all possible scenarios, which increases the complexity of testing.

The Dynamic Nature Of AI Models

It is important to recognize that AI systems are constantly evolving. Unlike traditional software, which follows predefined rules and behaviors, AI models learn from data over time. This characteristic makes AI testing significantly different, as the behavior of an AI model can change after each training session.

The challenge here is ensuring that AI models are robust enough to handle new data, unforeseen situations, and errors without negatively impacting the user experience. This dynamic nature makes testing more challenging, as testers must account for these continuous changes.

Lack Of Clear Benchmarks

It is often difficult to set clear benchmarks when testing AI models.

- Traditional software can be tested against well-defined criteria such as functional correctness, response time, and throughput.

- However, AI models rely heavily on data and are evaluated based on how well they predict or classify outcomes.

- The absence of standard benchmarks complicates the process of determining whether an AI system is performing as expected.

This leads to challenges in testing accuracy, fairness, and reliability.
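When no standard benchmark exists, one common reference point is a trivial baseline: a model that cannot beat "always predict the most frequent label" has not learned much. The sketch below illustrates the idea under stated assumptions; the function name, the labels, and the `model_accuracy` value are hypothetical and only serve as an example.

```python
from collections import Counter

def majority_baseline_accuracy(train_labels, test_labels):
    """Accuracy of always predicting the most common training label."""
    most_common_label, _ = Counter(train_labels).most_common(1)[0]
    hits = sum(label == most_common_label for label in test_labels)
    return hits / len(test_labels)

# Hypothetical labels for a fraud-detection task.
train_labels = ["ok"] * 8 + ["fraud"] * 2
test_labels = ["ok", "ok", "ok", "fraud", "ok"]

baseline = majority_baseline_accuracy(train_labels, test_labels)
model_accuracy = 0.82  # hypothetical score reported for the model under test

print(baseline)                   # 0.8
print(model_accuracy > baseline)  # True
```

A model that only narrowly beats this baseline, as in the example, deserves closer inspection before it is considered to be performing as expected.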

The Need For Large And Diverse Datasets

The quality of data used to train AI models directly affects the performance of the model. AI systems require vast amounts of diverse data to learn and generalize well. It is important to test how the AI handles edge cases and unseen data, which training sets often fail to cover.

The challenge is ensuring that AI systems are tested on a representative set of data, which includes various conditions, environments, and scenarios. Testing with incomplete or biased data can lead to inaccurate or biased results.
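One way to check whether a test set is representative is to break accuracy down by slice (for example, by environment or condition) and flag slices with too few examples. The following is a minimal sketch; the record layout, the `slice_report` name, and the `min_count` threshold are assumptions made for illustration, not part of any particular tool.

```python
from collections import defaultdict

def slice_report(records, min_count=2):
    """Return per-slice accuracy and flag slices with too few test examples.

    Each record is a (slice_name, true_label, predicted_label) tuple.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for slice_name, truth, predicted in records:
        totals[slice_name] += 1
        if truth == predicted:
            correct[slice_name] += 1
    return {
        name: {
            "count": total,
            "accuracy": correct[name] / total,
            "under_sampled": total < min_count,
        }
        for name, total in totals.items()
    }

# Hypothetical results for an image classifier, sliced by lighting condition.
records = [
    ("daylight", "cat", "cat"),
    ("daylight", "dog", "dog"),
    ("daylight", "dog", "cat"),
    ("night", "cat", "dog"),
]
report = slice_report(records)
for name, stats in report.items():
    print(name, stats)
```

In this example the "night" slice has a single example, so its accuracy says little; the `under_sampled` flag makes that gap visible instead of hiding it inside an overall average.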

Overfitting And Underfitting

It is important to prevent overfitting and underfitting when testing AI models. Overfitting occurs when a model performs well on training data but fails to generalize to new, unseen data. Underfitting, on the other hand, happens when a model is too simple and does not capture the complexity of the data.

AI testing must ensure that models are neither overfitted nor underfitted. This can be challenging, as models must be fine-tuned to balance these two extremes while maintaining high accuracy.
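A simple first check for both problems is to compare the score on training data with the score on held-out validation data. The sketch below assumes scores between 0 and 1; the `min_score` and `max_gap` thresholds are illustrative assumptions that would need to be tuned for a real project.

```python
def diagnose_fit(train_score, val_score, min_score=0.8, max_gap=0.1):
    """Classify a model's fit from its training and validation scores."""
    if train_score < min_score:
        # The model does poorly even on data it has already seen.
        return "underfitting"
    if train_score - val_score > max_gap:
        # The model does well on seen data but much worse on unseen data.
        return "overfitting"
    return "acceptable"

print(diagnose_fit(0.99, 0.72))  # overfitting
print(diagnose_fit(0.61, 0.60))  # underfitting
print(diagnose_fit(0.91, 0.88))  # acceptable
```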

Ensuring Robustness

It is essential to test the robustness of AI systems to ensure they can handle real-world conditions. AI models must be tested under various scenarios to evaluate how well they respond to unexpected inputs or adversarial attacks.

The challenge lies in creating test scenarios that simulate a wide range of real-world conditions. This includes testing for unusual or malicious inputs that may be used to exploit weaknesses in the AI system.
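As a rough illustration of one such scenario, the sketch below adds small random noise to each input and measures how often the prediction stays the same. It covers random perturbations only, not deliberately crafted adversarial inputs. The `toy_predict` function is a hypothetical stand-in for a real model, and the noise level and trial count are assumptions.

```python
import random

def prediction_stability(predict, inputs, noise=0.05, trials=20, seed=0):
    """Fraction of noisy copies of each input that keep the original prediction."""
    rng = random.Random(seed)  # fixed seed so the test is repeatable
    stable = 0
    total = 0
    for features in inputs:
        baseline = predict(features)
        for _ in range(trials):
            noisy = [x + rng.uniform(-noise, noise) for x in features]
            stable += predict(noisy) == baseline
            total += 1
    return stable / total

# Stand-in model (hypothetical): a fixed threshold on the sum of the features.
def toy_predict(features):
    return int(sum(features) > 1.0)

far_from_boundary = [[0.1, 0.2], [0.9, 0.8]]
near_boundary = [[0.5, 0.5]]

print(prediction_stability(toy_predict, far_from_boundary))
print(prediction_stability(toy_predict, near_boundary))
```

Inputs far from the decision boundary stay stable under this noise, while inputs close to it flip easily. A low stability score points testers at the regions where the model is most fragile.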

Handling Continuous Learning

It is important to consider that many AI models are designed for continuous learning, meaning they are constantly adapting and updating based on new data. While this allows AI systems to improve over time, it also adds complexity to testing.

The challenge is ensuring that AI systems continue to function correctly as they learn and evolve. Testers must monitor the system’s performance and ensure that new data does not introduce unforeseen problems or biases.
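One way to monitor an evolving model is a regression gate: after each update, score the new version on the same frozen holdout set as the previous version and block the release if any metric drops too far. The sketch below is a minimal version of that idea; the metric names, values, and `tolerance` are hypothetical.

```python
def passes_regression_gate(old_metrics, new_metrics, tolerance=0.01):
    """Return (ok, regressions).

    A metric counts as a regression if it is missing from new_metrics
    or has dropped by more than `tolerance`.
    """
    regressions = {}
    for name, old_value in old_metrics.items():
        new_value = new_metrics.get(name)
        if new_value is None or old_value - new_value > tolerance:
            regressions[name] = (old_value, new_value)
    return (not regressions, regressions)

# Hypothetical scores on the same frozen holdout set, before and after an update.
old = {"accuracy": 0.91, "recall_minority_group": 0.84}
new = {"accuracy": 0.93, "recall_minority_group": 0.78}

ok, regressions = passes_regression_gate(old, new)
print(ok)           # False
print(regressions)  # {'recall_minority_group': (0.84, 0.78)}
```

Here overall accuracy improved, yet recall for one group fell, which is the kind of unforeseen problem that a single headline metric would hide.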

The Difficulty Of Automating AI Testing

It is often difficult to fully automate AI testing. Traditional software testing can be automated using tools that execute predefined test cases with known expected outputs. However, AI systems are less predictable, and judging whether their outputs are correct often requires manual intervention.

The challenge is developing automated testing frameworks that can handle the dynamic nature of AI models. Testers must create intelligent testing tools that can adapt to changes in the system and identify potential issues effectively.
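One technique that helps here is metamorphic testing: instead of asserting an exact expected output, the test asserts a relation that should hold no matter how the model changes, such as "the prediction must not change when the input is trivially altered". The sketch below is illustrative only; both classifiers are hypothetical stand-ins for a real model.

```python
def check_invariance(predict, inputs, transform):
    """Return the inputs whose prediction changes when `transform` is applied."""
    return [x for x in inputs if predict(x) != predict(transform(x))]

# Stand-in models (hypothetical): keyword-based sentiment classifiers.
def robust_sentiment(text):
    return "positive" if "good" in text.lower() else "negative"

def brittle_sentiment(text):
    # Case-sensitive on purpose, to show what a failing check looks like.
    return "positive" if "good" in text else "negative"

def shout(text):
    return text.upper()

samples = ["Good service", "Slow and buggy", "pretty good overall"]

print(check_invariance(robust_sentiment, samples, shout))   # []
print(check_invariance(brittle_sentiment, samples, shout))  # ['pretty good overall']
```

Because the check never mentions a specific expected label, it can keep running unchanged after the model is retrained.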

Resource-Intensive Testing Process

AI testing requires significant computational resources, as training and evaluating large models can be time-consuming and expensive.

- Testing AI models can require access to powerful hardware, such as GPUs, and vast datasets to ensure the system is tested thoroughly.

- The challenge here is managing the resources needed for AI testing.

This includes budgeting for hardware, storage, and cloud services that are necessary to run extensive tests.

Testing AI Systems in Real-World Environments

It is important to test AI systems in real-world environments to understand how they will perform outside of controlled test settings. Testing in the real world allows testers to evaluate AI models under realistic conditions, which helps uncover potential issues that might not appear in the lab.

The challenge is simulating real-world environments and ensuring that AI systems can operate effectively across diverse conditions.

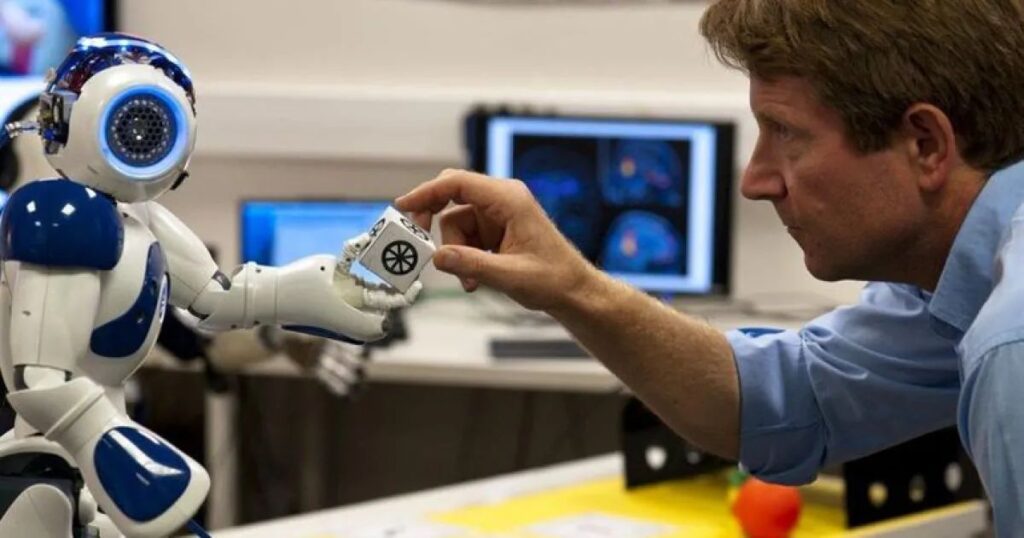

The Role Of Human Oversight

It is essential to have human oversight when testing AI systems. Despite their capabilities, AI systems are not perfect and may make mistakes or act unpredictably. Human testers can help identify issues that might be missed by automated tools or by the AI itself.

The challenge is integrating human oversight into the testing process without slowing down the overall development cycle. Finding the right balance between automation and manual testing is key to ensuring AI systems are thoroughly tested.

Testing AI Ethics And Compliance

It is important to test AI systems for ethical considerations and regulatory compliance. Many AI applications, especially in healthcare, finance, and law enforcement, must adhere to strict ethical and legal standards.

The challenge is ensuring that AI models comply with these regulations while also meeting performance goals. This includes testing for fairness, accountability, and transparency in decision-making processes.
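Fairness can be tested with concrete metrics. One of several possible choices is the demographic parity gap: the difference in positive-decision rates between groups. The sketch below assumes decisions are available as simple (group, approved) pairs; the data is hypothetical, and which fairness metric is appropriate depends on the application and the applicable regulations.

```python
def selection_rates(decisions):
    """Map each group to its share of positive decisions.

    `decisions` is a list of (group, approved) pairs, with approved True/False.
    """
    counts = {}
    for group, approved in decisions:
        total, positives = counts.get(group, (0, 0))
        counts[group] = (total + 1, positives + int(approved))
    return {group: positives / total for group, (total, positives) in counts.items()}

def demographic_parity_gap(decisions):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(decisions).values()
    return max(rates) - min(rates)

# Hypothetical loan decisions for two groups.
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

print(selection_rates(decisions))         # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(decisions))  # 0.5
```

A gap of 0 means every group is approved at the same rate. A large gap is not proof of unfair treatment on its own, but it is a signal that warrants investigation.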

Lack Of Standardized Testing Frameworks

It is crucial to recognize that AI testing lacks a standardized framework, making it difficult to compare results across different models and systems.

The absence of standardized protocols leads to inconsistencies in AI testing practices.

The challenge is developing universally accepted standards for AI testing. This would allow for more effective evaluation and comparison of different AI models.

AI Model Evaluation Metrics

It is necessary to choose appropriate evaluation metrics for AI models. Unlike traditional software, which is typically judged on whether it passes or fails its test cases, AI models are evaluated using statistical performance metrics such as accuracy, precision, recall, and F1 score.

The challenge is selecting the right metrics to evaluate AI models effectively. This requires an understanding of the specific use case and the desired outcomes.
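To make the difference between these metrics concrete, the sketch below computes all four for a binary classifier from lists of true and predicted labels. The function name and the sample labels are assumptions for illustration; in practice an established library would normally be used instead.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Hypothetical imbalanced test set: 2 positive cases out of 10.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In this example accuracy is 0.9 and precision is 1.0, yet recall is only 0.5 because the model misses half of the positive cases. For a use case such as medical diagnosis, that missed half may matter far more than the high accuracy suggests.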

Transparency in AI Testing

It is vital to ensure transparency in AI testing. The testing process should be open and verifiable so that the results can be trusted, and that trust in the results is what builds trust in the system itself. Transparency also makes it possible to hold AI systems to standards of fairness and accountability. Clear processes allow users to understand how decisions are made.

The data used for testing must be accessible and well-documented. Transparency helps to identify and fix biases in AI models. It also ensures that AI systems operate as expected. Clear communication about testing methods builds confidence in the results. It allows for better collaboration among developers, testers, and users.
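One practical way to make a test run verifiable is to record, alongside the results, exactly which model and which data were tested. The sketch below stores a SHA-256 fingerprint of the test data with the metrics so that others can confirm they are looking at the same dataset. The field names, the model identifier, and the sample data are hypothetical.

```python
import hashlib
import json

def build_test_record(model_version, dataset_bytes, metrics, notes=""):
    """Bundle what was tested, on which data, and with what result."""
    return {
        "model_version": model_version,
        "dataset_sha256": hashlib.sha256(dataset_bytes).hexdigest(),
        "metrics": metrics,
        "notes": notes,
    }

record = build_test_record(
    model_version="example-model-v2",           # hypothetical identifier
    dataset_bytes=b"id,label\n1,cat\n2,dog\n",  # stand-in for the real test file
    metrics={"accuracy": 0.9, "recall": 0.5},
    notes="Holdout set excludes night-time images.",
)

# Serialize to JSON so the record can be stored or shared with the results.
print(json.dumps(record, indent=2, sort_keys=True))
```

The `notes` field is a place to document known gaps in the test data, which supports the kind of clear communication about testing methods described above.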

Frequently Asked Questions

Why is AI testing so challenging?

The complexity of AI systems, the need for large and diverse datasets, and the lack of clear benchmarks all contribute to the challenges of AI testing.

How can AI testing be improved?

AI testing can be improved by developing standardized testing frameworks, continuously monitoring models as they learn from new data, and incorporating human oversight to identify issues that automated tests might miss.

What is the role of data in AI testing?

Data plays a critical role in AI testing, as the performance of AI models depends heavily on the quality, diversity, and quantity of data used during training and evaluation.

How can AI bias be addressed in testing?

AI bias can be addressed by using diverse, representative datasets and continuously monitoring AI systems for fairness and equity throughout the testing process.

Conclusion

The challenges of AI testing are numerous and complex, but they are not insurmountable. With a thoughtful approach that includes ethical considerations and continuous evaluation, it is possible to ensure that AI systems function as intended and provide reliable results.

By understanding these challenges and developing effective solutions, organizations can improve the reliability and fairness of their AI applications, fostering greater trust and adoption of AI technology in the real world.